RIS Compute 103¶

Note

Connecting to get command line access: ssh wustlkey@compute1-client-1.ris.wustl.edu

Queue to use: workshop, workshop-interactive

Group to use: compute-workshop (if part of multiple groups)

Compute 103 Video¶

What will this documentation provide?¶

A beginner's understanding of how to create commands and submit jobs in the RIS Compute Environment.

Hurdles to Using an HPC Environment¶

A lack of Unix/Linux CLI knowledge (this can be addressed by reading RIS Compute 101 and 102).

Using a job queue system.

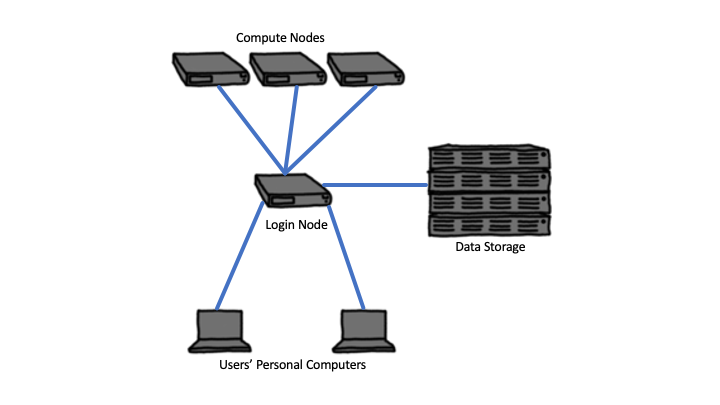

A Representation of What an HPC Cluster Looks Like¶

Problems a Queue System Addresses¶

The need to manage hundreds to thousands of jobs.

The decision of which nodes to run jobs on.

Balancing the needs of a diverse set of users.

Providing the tools users need to make their work easier.

Batch Scripts and Shell Scripts¶

In a traditional HPC environment, a batch script tells the system how to process your data.

In the RIS Compute Environment this is NOT the case.

However, you can emulate this with a shell script.

Running Jobs in the RIS HPC Environment¶

First we need to connect to the server as demonstrated previously.

ssh wustlkey@compute1-client-1.ris.wustl.edu

Then we can run our first command.

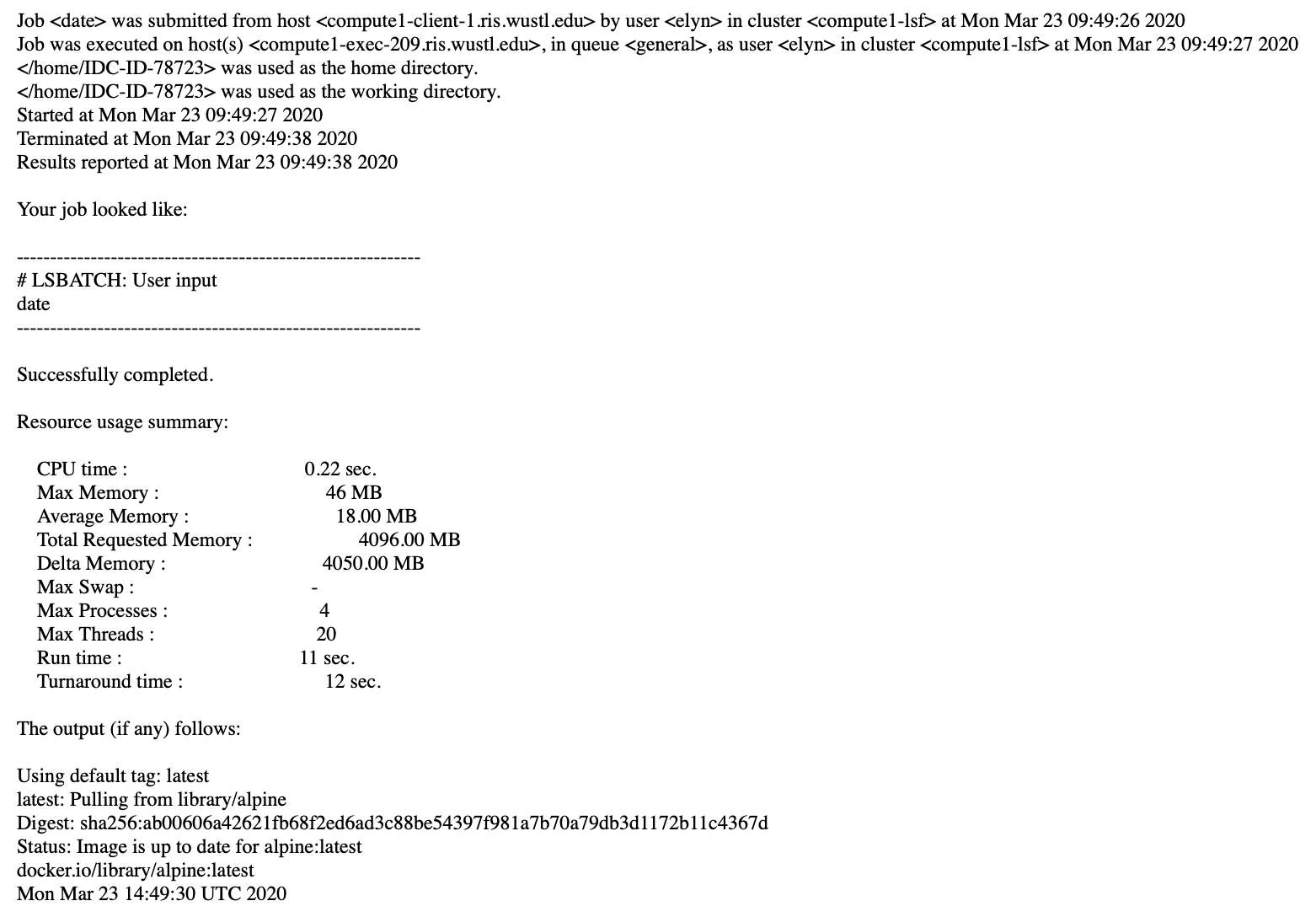

bsub -G compute-workshop -q workshop -a 'docker(alpine)' date

This command starts a docker image based on the Alpine distribution of Linux and tells it to run the date command.
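To make the role of each option easier to see, the sketch below rebuilds the same submission from named parts and prints it instead of running it, so it can be tried away from the cluster. The variable names and the build_bsub helper are illustrative assumptions, not part of the RIS tooling.

```shell
# Values taken from the command above.
GROUP="compute-workshop"   # -G: user group the job is submitted under
QUEUE="workshop"           # -q: queue that receives the job
IMAGE="alpine"             # image named inside -a 'docker(...)'
JOB_CMD="date"             # command executed inside the container

# Hypothetical helper: assemble the bsub line as text (it does not submit anything).
build_bsub() {
    printf "bsub -G %s -q %s -a 'docker(%s)' %s\n" "$GROUP" "$QUEUE" "$IMAGE" "$JOB_CMD"
}

build_bsub
# prints: bsub -G compute-workshop -q workshop -a 'docker(alpine)' date
```

Changing IMAGE or JOB_CMD and calling build_bsub again shows how a different image or command would slot into the same submission.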

When the job completes, you will receive an email with its results.

You can run any job interactively by using the `-Is` option, so that you have access to the docker image via the CLI.

bsub -Is -G compute-workshop -q workshop-interactive -a 'docker(ubuntu:bionic)' /bin/bash

You can use the base Linux docker images that contain nothing but the OS, or you can use a docker image that has been created for the software you want to use.

There are environment variables that you can use to pass the docker image information you want to use in the job. These are similar to `#PBS` or other batch system arguments. They are called LSF arguments, and they are documented separately.

The `bsub` command submits a job, as we have seen.

The `bjobs` command provides a list of the jobs that are running.

The `bkill` command kills a job in the queue.

The `bqueues` command shows what queues are available.
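These commands are often used together. The sketch below assumes bsub confirms a submission with a line of the form `Job <ID> is submitted to queue <NAME>.` and pulls the job ID out of it with a hypothetical helper named extract_job_id. The sample line and the ID 12345 are made up for illustration.

```shell
# Hypothetical helper: read bsub's confirmation line on stdin and print the job ID.
extract_job_id() {
    sed -n 's/^Job <\([0-9][0-9]*\)>.*/\1/p'
}

# Illustrative confirmation line; a real submission prints its own job ID.
SAMPLE='Job <12345> is submitted to queue <workshop>.'
JOB_ID=$(printf '%s\n' "$SAMPLE" | extract_job_id)
echo "$JOB_ID"

# On the cluster, the same helper could feed the other commands:
#   JOB_ID=$(bsub -G compute-workshop -q workshop -a 'docker(alpine)' date | extract_job_id)
#   bjobs "$JOB_ID"    # check on that job
#   bkill "$JOB_ID"    # cancel it if needed
#   bqueues            # list the available queues
```

Capturing the ID at submission time avoids having to search the bjobs listing for it later.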

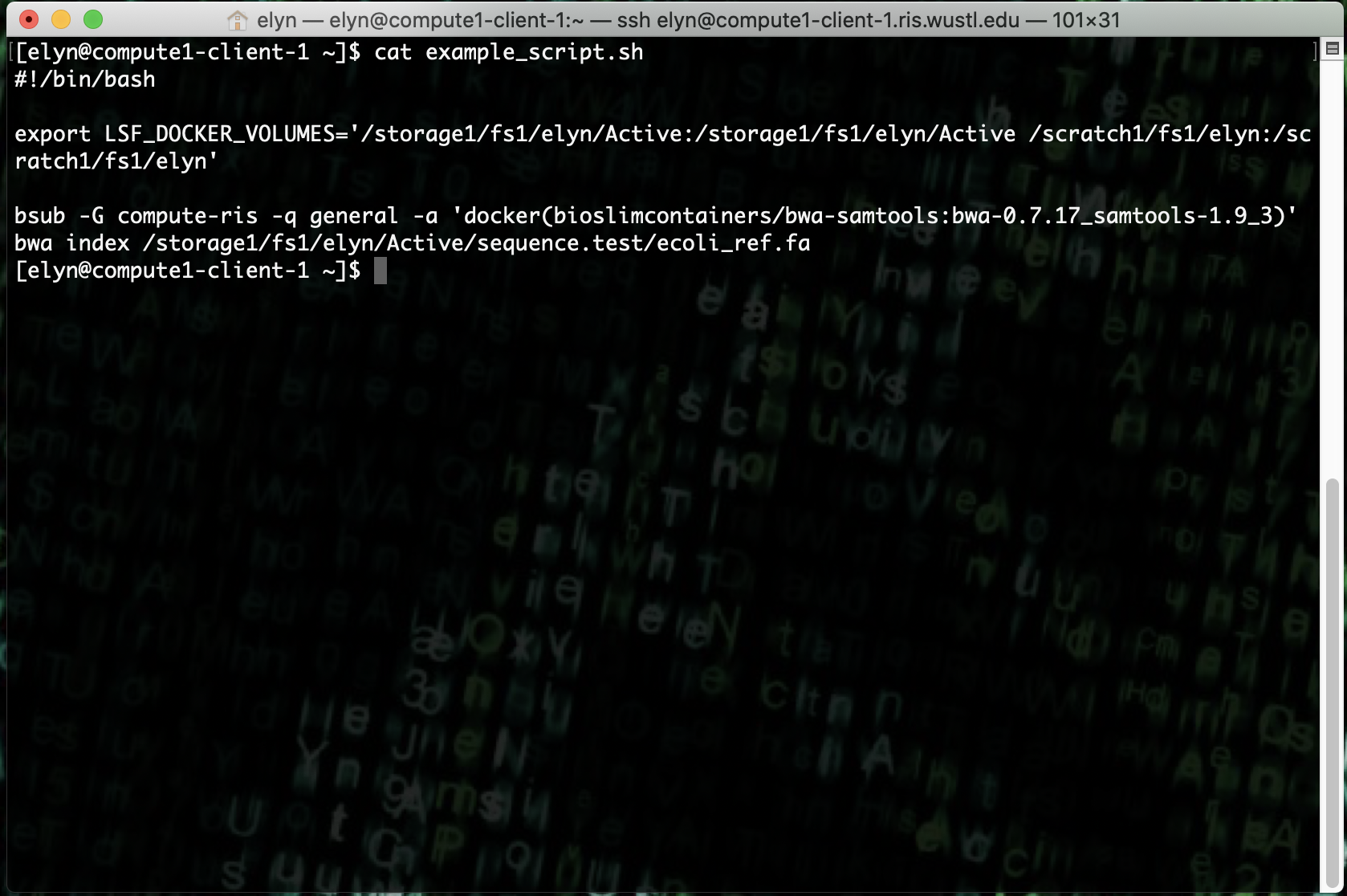

We can use a shell script to emulate the batch type of script. In the example below, we make use of one of the LSF variables so that we can run a job with data. (We will go more in depth into the LSF variables and running more complex jobs in RIS Compute 104).

The particular LSF variable we’re using here tells the docker script to mount, or make accessible, the storage and scratch directories associated with our account so that we can access the files contained within them.

This part is similar to the PBS parameters that are set in a traditional HPC environment.

The script also contains the command we wish to run, which uses the `bsub` command and a docker image. This particular image contains bwa, which we are using in this example to index an E. coli reference that we’ve downloaded.

Once we have the script created, we can run it just like the shell scripts that were created previously.
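As a rough text version of such a script, the sketch below writes one to a file and checks its syntax without submitting anything. Several names are assumptions rather than details from this page: LSF_DOCKER_VOLUMES as the variable that mounts directories, the storage and scratch paths, the docker image name, and the reference file name are all placeholders to replace with your own.

```shell
# Write the wrapper script; the quoted 'EOF' keeps the variables unexpanded in the file.
cat > bwa_index.sh <<'EOF'
#!/bin/bash
# Placeholder paths: replace with your own storage and scratch allocations.
STORAGE="/storage1/fs1/example_allocation/Active"
SCRATCH="/scratch1/fs1/example_allocation"

# Assumed variable name: space-separated host_path:container_path pairs to mount.
export LSF_DOCKER_VOLUMES="$STORAGE:$STORAGE $SCRATCH:$SCRATCH"

# Placeholder image name: use any docker image that provides bwa.
bsub -G compute-workshop -q workshop \
    -a 'docker(example/bwa:latest)' \
    bwa index "$STORAGE/ecoli_reference.fasta"
EOF

chmod +x bwa_index.sh                      # make the script executable
sh -n bwa_index.sh && echo "syntax OK"     # parse only; nothing is submitted

# On the cluster, the script would then be run like any other shell script:
#   ./bwa_index.sh
```

Keeping the mount settings and the bsub line in one file plays the role that a batch script's header and body play in a traditional scheduler.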

Now the job has been submitted and is running per the information we gave both the LSF environment and the actual software command.

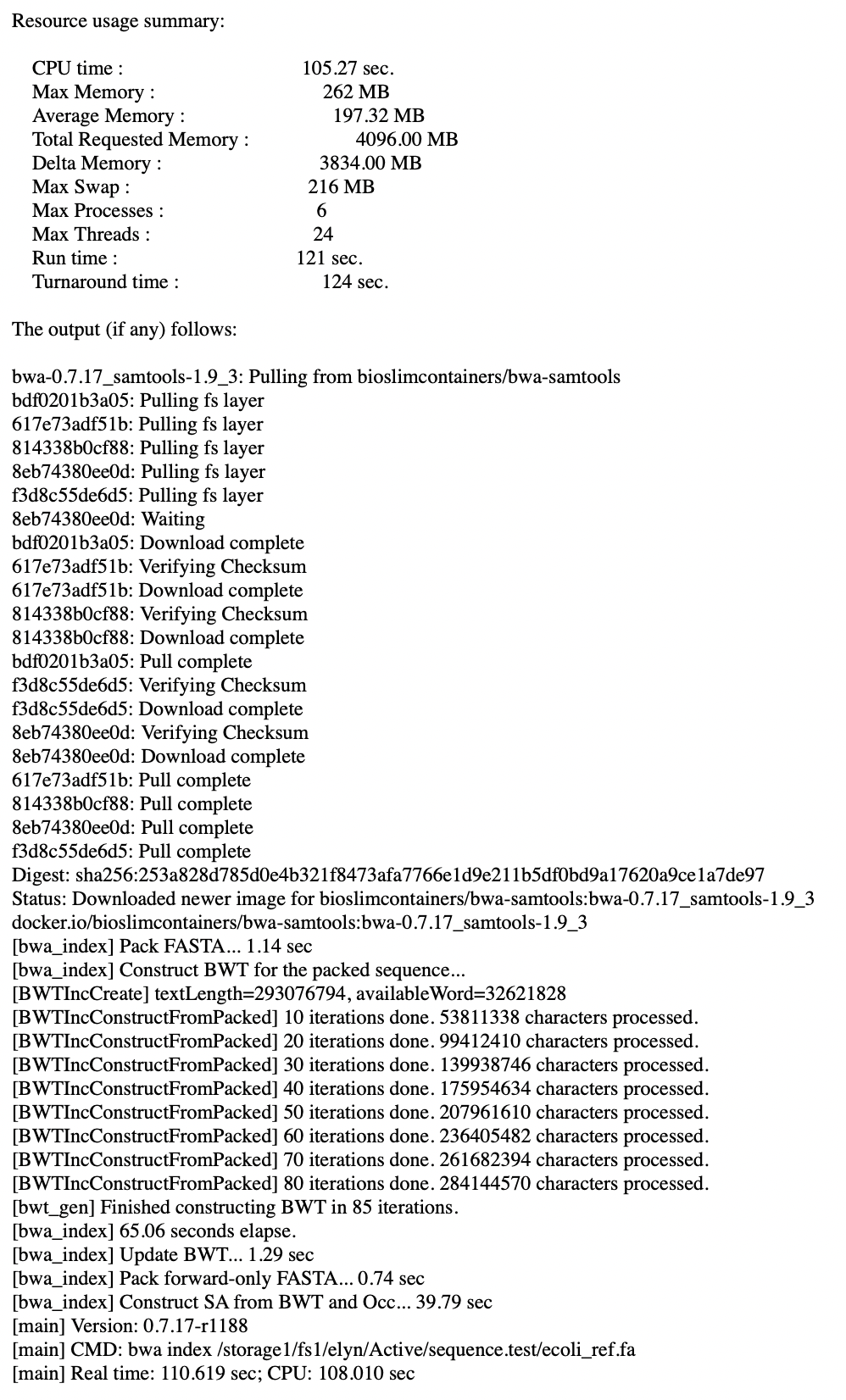

Once the job completes, an email is sent reporting how the run finished.

Here we can see that the bwa index command ran to completion. And if we check our storage directory, we will see the files we expect.
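One way to make that check repeatable is a small helper like the sketch below. It assumes bwa index wrote its usual five output files (suffixes amb, ann, bwt, pac, and sa) next to the reference; the function name check_bwa_index and the example path are hypothetical.

```shell
# Hypothetical helper: report any expected bwa index file that is missing.
# Returns 0 when all five files exist next to the reference, 1 otherwise.
check_bwa_index() {
    ref="$1"
    missing=0
    for ext in amb ann bwt pac sa; do
        if [ ! -e "$ref.$ext" ]; then
            echo "missing: $ref.$ext"
            missing=1
        fi
    done
    return "$missing"
}

# Example call with a placeholder path:
#   check_bwa_index /path/to/ecoli_reference.fasta && echo "index complete"
```

The helper only tests that the files exist; it does not verify their contents.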