RIS Compute 104

Note

To connect and get command-line access: ssh wustlkey@compute1-client-1.ris.wustl.edu

Queue to use: workshop, workshop-interactive

Group to use: compute-workshop (if part of multiple groups)

Compute 104 Videos

What will this documentation provide?

A more in-depth understanding of how to use the RIS Compute Environment for analysis through more advanced use of LSF variables and Docker images.

More with LSF Environment Variables

The LSF variables that will be most useful here are LSF_DOCKER_VOLUMES, LSF_DOCKER_NETWORK, and LSF_DOCKER_IPC.

LSF_DOCKER_VOLUMES: passes filesystem locations to the docker command, which mounts them so that the container has access to them. Use it to mount storage and scratch as well as other accessible directories.

LSF_DOCKER_NETWORK: tells Docker which network the container should use; this will most likely be 'host'. This is necessary for parallel computing.

LSF_DOCKER_IPC: makes use of Docker's --ipc argument and is useful for parallel computing, in particular MPI applications.
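LSF_DOCKER_VOLUMES takes a space-separated list of host:container pairs. When you want each directory to appear at the same path inside the container, as in the examples on this page, a small helper can build that string for you. This is a minimal sketch; the function name build_docker_volumes is hypothetical and not part of LSF or RIS tooling.

```shell
#!/bin/bash
# Hypothetical helper (not part of LSF or RIS tooling): build an
# LSF_DOCKER_VOLUMES value that mounts each directory at the same path
# inside the container, e.g. "/a:/a /b:/b".
build_docker_volumes() {
  local volumes="" dir
  for dir in "$@"; do
    volumes+="${dir}:${dir} "
  done
  # Strip the single trailing space left by the loop.
  printf '%s' "${volumes% }"
}

# Usage with the placeholder paths used elsewhere on this page.
export LSF_DOCKER_VOLUMES="$(build_docker_volumes /path/to/storage /path/to/scratch)"
echo "$LSF_DOCKER_VOLUMES"
```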

Further bsub Arguments

There are more bsub arguments than those demonstrated so far.

-n tells LSF how many processors (job slots) to allocate to the job.

-R takes a resource requirement string and will most likely be used to request more memory, for example 'rusage[mem=32GB]'.

-J takes a job name string. If the name ends in an index range, such as 'myjob[1-10]', it creates a job array for handling multiple similar jobs. The maximum allowed in a job array is 1000.
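In a job array, LSF sets LSB_JOBINDEX in each element, and %J and %I in an -o filename expand to the job ID and element index. A common pattern is to have each element pick its own input line from a list. This is a minimal sketch under assumptions: run_one.sh, samples.txt and pick_sample are hypothetical names, and the script is assumed to be executable and to sit in a directory mounted through LSF_DOCKER_VOLUMES.

```shell
#!/bin/bash
# Sketch of a hypothetical per-element script (e.g. /path/to/run_one.sh)
# for a job array submitted with something like:
#   bsub -J 'align[1-10]' -o 'align.%J.%I.log' -G compute-workshop -q workshop \
#     -a 'docker(bioslimcontainers/bwa-samtools:bwa-0.7.17_samtools-1.9_3)' \
#     /path/to/run_one.sh
# LSF sets LSB_JOBINDEX to this element's index (1 to 10 here).

# Print line number $2 of file $1.
pick_sample() {
  sed -n "${2}p" "$1"
}

samples_file=/path/to/samples.txt   # hypothetical: one input per line
index="${LSB_JOBINDEX:-1}"          # default to 1 when run outside LSF

if [ -r "$samples_file" ]; then
  sample="$(pick_sample "$samples_file" "$index")"
  echo "Element ${index} processing ${sample}"
fi
```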

Combining This Information Into a More Complex Job

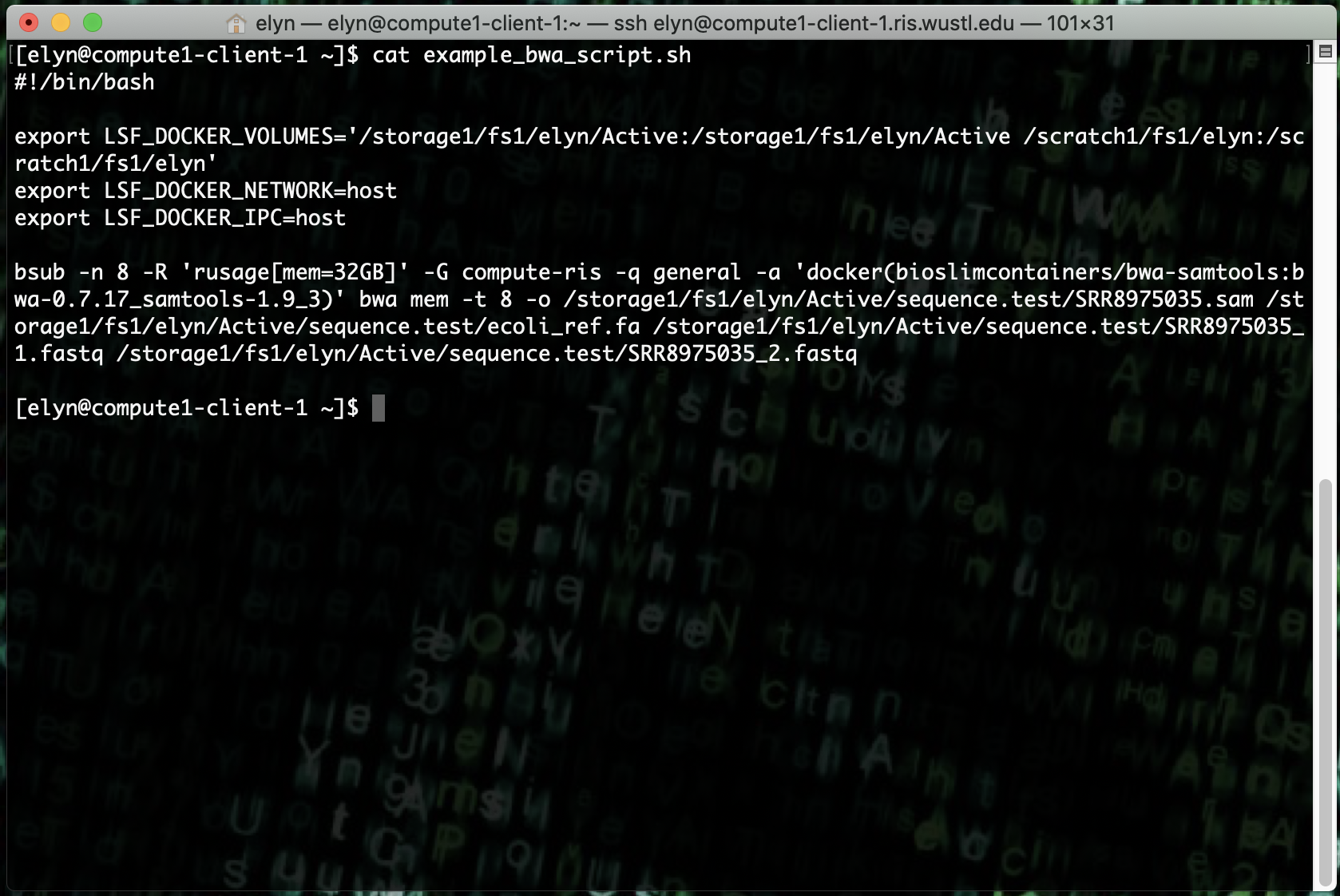

If we combine this information, we can run a more complex job, like an alignment job that requires multiple cores.

For the aligner, let's say we want to give it 4 GB of RAM per processor and use 8 processors, so the total amount of RAM we need is 32 GB.
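The arithmetic is simply processors times RAM per processor. Keeping both numbers in shell variables, as in this minimal sketch, helps keep -n, -R and the aligner's -t in agreement if you change the core count. The variable names are illustrative only, and the sketch assumes, as the job script on this page does, that the rusage value is the total for the job.

```shell
#!/bin/bash
# Work out the memory request from the per-processor figure.
cores=8            # passed to bsub -n and to bwa mem -t
mem_per_core_gb=4  # RAM we want per processor
total_mem_gb=$((cores * mem_per_core_gb))

# Resource requirement string for bsub -R.
rusage="rusage[mem=${total_mem_gb}GB]"
echo "bsub -n ${cores} -R '${rusage}' ..."
```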

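```shell
#!/bin/bash
# Mount storage and scratch at the same paths inside the container.
export LSF_DOCKER_VOLUMES='/path/to/storage:/path/to/storage /path/to/scratch:/path/to/scratch'
# Use the host's network and IPC namespace (for parallel and MPI jobs).
export LSF_DOCKER_NETWORK=host
export LSF_DOCKER_IPC=host
# 8 processors x 4 GB each = 32 GB total; bwa mem -t 8 uses all 8 threads.
bsub -n 8 -R 'rusage[mem=32GB]' -G compute-workshop -q workshop \
  -a 'docker(bioslimcontainers/bwa-samtools:bwa-0.7.17_samtools-1.9_3)' \
  bwa mem -t 8 -o /path/to/output.sam /path/to/reference.fa \
  /path/to/reads_1.fastq /path/to/reads_2.fastq
```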

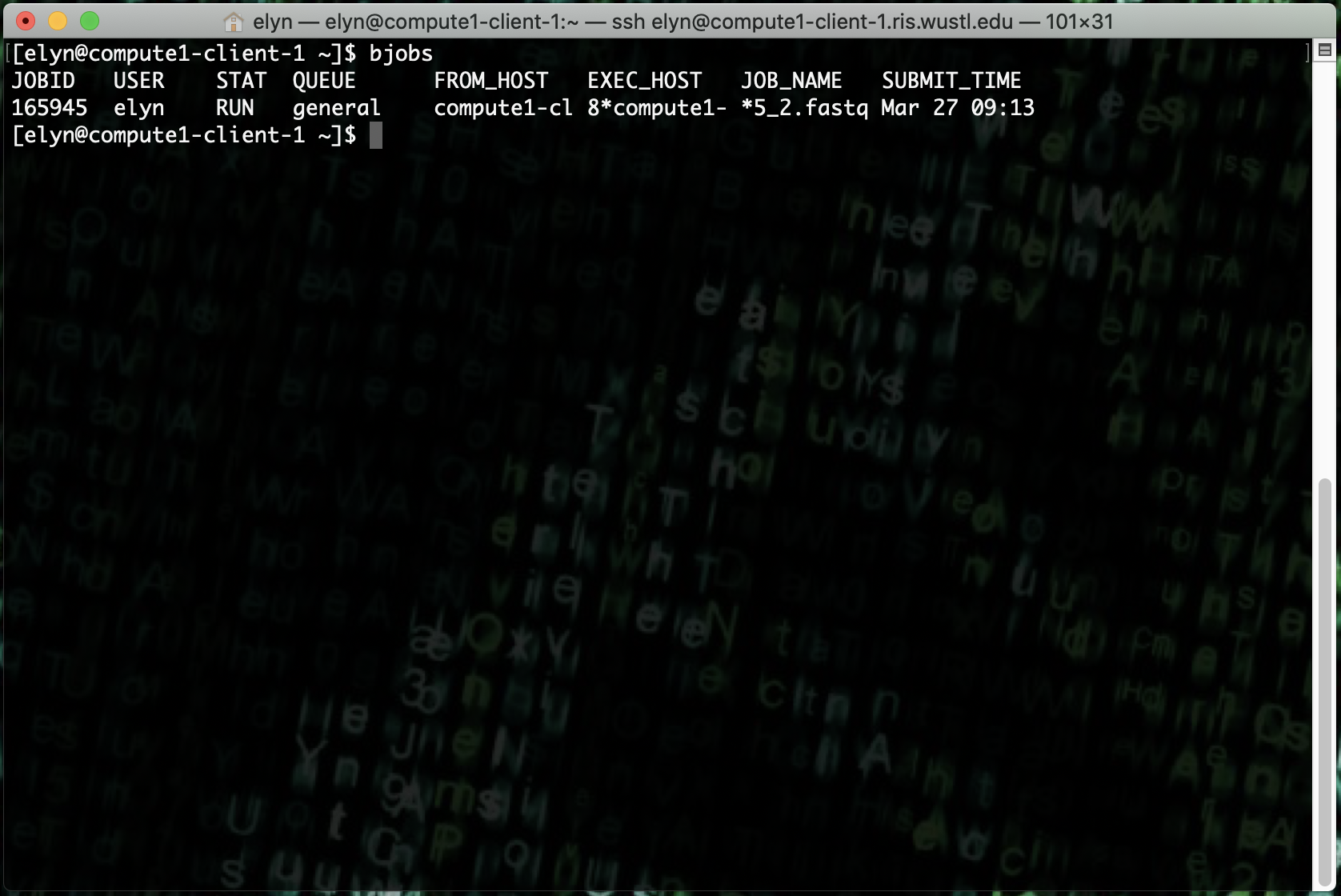

Once we submit the above job, we can see it running in the queue.

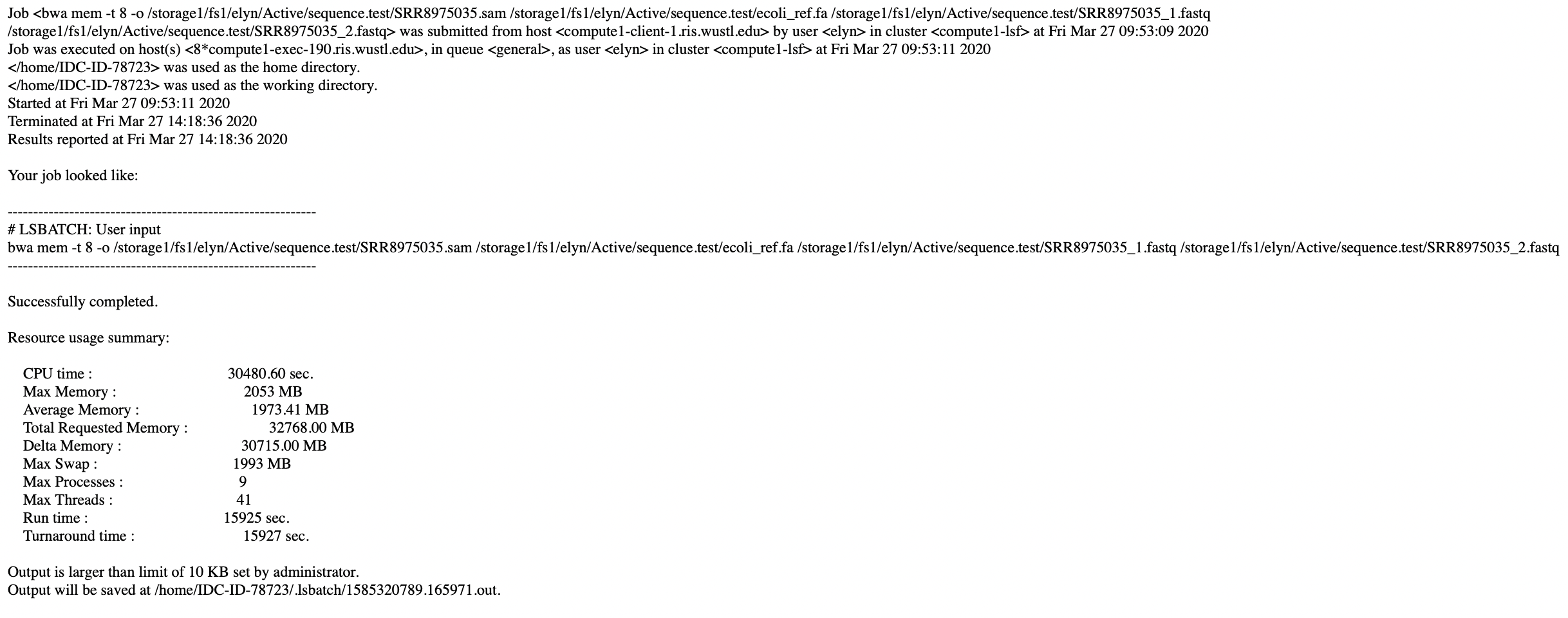

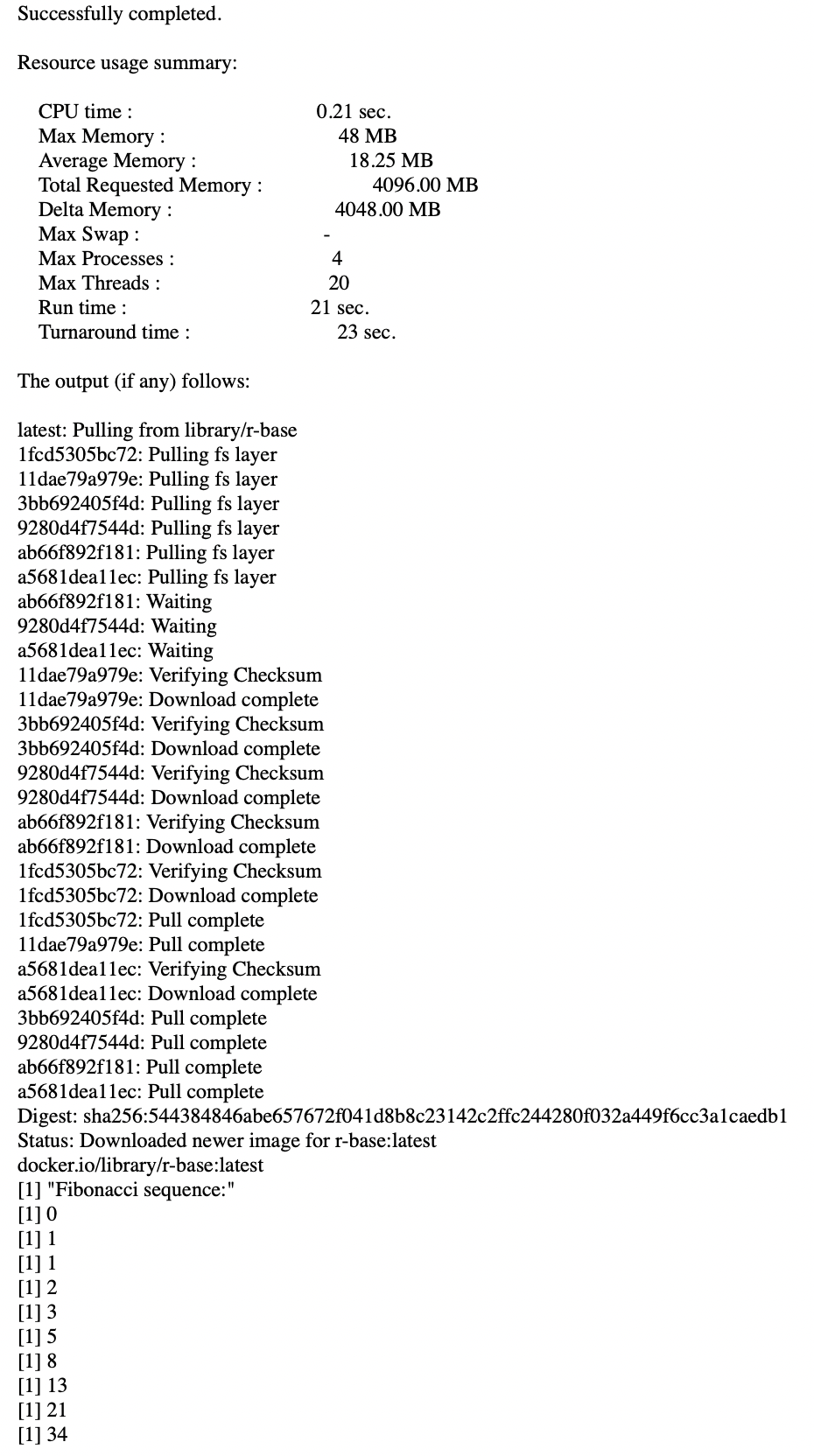

And when the job is finished we get an email.

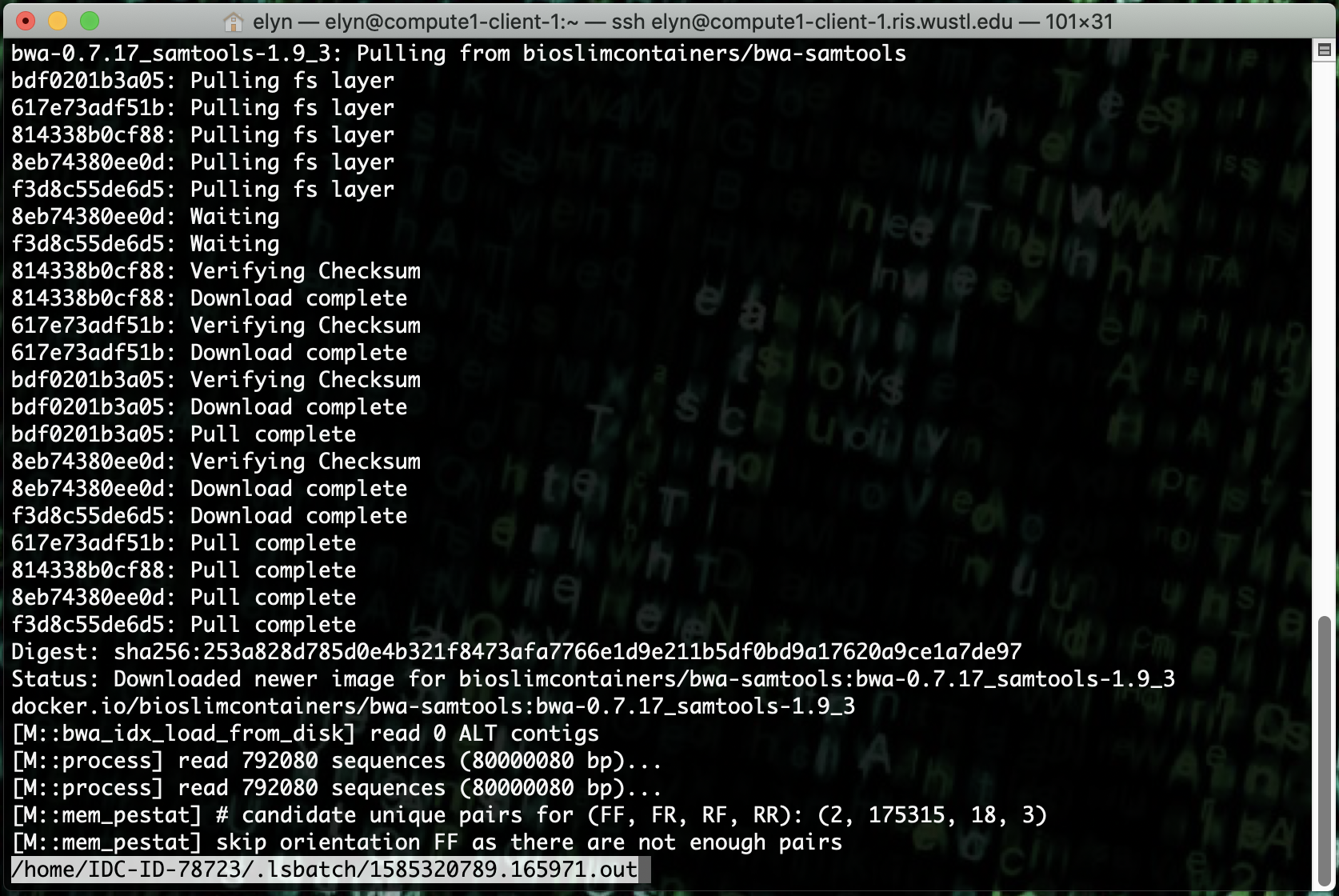

Here we can see that the standard output is larger than 10 KB, so it has been placed in a file for us.

As you can see, this is the standard output we would expect from a bwa job.

The generated SAM file is in the directory we specified.
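A quick way to confirm that the SAM file looks complete is to count its header lines (those starting with @) and its alignment records. This is a minimal sketch with awk; sam_summary is a hypothetical helper. samtools, which is also in the container image used above, provides more thorough checks such as samtools flagstat.

```shell
#!/bin/bash
# Hypothetical helper: report header and alignment-record counts for a SAM file.
sam_summary() {
  awk '
    /^@/ { headers++; next }   # header lines start with @
    NF   { records++ }         # every other non-empty line is an alignment
    END  { printf "headers=%d records=%d\n", headers, records }
  ' "$1"
}

# Usage (placeholder path from the job above):
# sam_summary /path/to/output.sam
```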

An Interactive Job Example

We can also run a more complex interactive job. In this example we will be using R.

We can start an interactive job using an R Docker image with the following commands.

```shell
export LSF_DOCKER_VOLUMES='/path/to/storage:/path/to/storage /path/to/scratch:/path/to/scratch'
bsub -Is -G compute-workshop -q workshop-interactive -a 'docker(gcr.io/ris-registry-shared/rstudio:latest)' /bin/bash
```

We will run an R script that prints the first X terms of the Fibonacci sequence, where X is an integer set by the user in the script.
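The R script itself is not shown on this page. As a minimal sketch, a script like the one described could be written from the shell into a mounted directory before starting the job. The filename fibonacci.R and the value X = 10 are assumptions for illustration only.

```shell
#!/bin/bash
# Write a hypothetical Fibonacci script; point SCRIPT_PATH at a mounted directory.
script="${SCRIPT_PATH:-./fibonacci.R}"
cat > "$script" <<'EOF'
x <- 10  # number of Fibonacci terms to print (edit as needed)
fib <- numeric(x)
fib[1] <- 0
if (x > 1) fib[2] <- 1
if (x > 2) for (i in 3:x) fib[i] <- fib[i - 1] + fib[i - 2]
print(fib)
EOF
echo "Wrote $script"
```

Inside the interactive container, the script can then be run with Rscript followed by its path.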

This type of job can also be run as a non-interactive job by changing the bsub command slightly.

```shell
export LSF_DOCKER_VOLUMES='/path/to/storage:/path/to/storage /path/to/scratch:/path/to/scratch'
bsub -G compute-workshop -q workshop -a 'docker(r-base:latest)' Rscript /path/to/script
```

An Interactive GUI Job Example

Besides regular interactive jobs, you can run jobs that use software such as noVNC to create servers you can log into from a web browser, giving you a GUI environment.

In this example we will be starting up a Docker environment that has a lot of software already installed.

When you start this job, it will give you a URL to open in a web browser to access the instance. It will also give you a password to use when logging into the GUI environment from the browser.

You can also set the password yourself by setting the VNC_PW variable before running the command.

```shell
export LSF_DOCKER_PORTS='8080:8080'
export PASSWORD='<password>'  # replace <password> with the password you want to use
bsub -Is -R 'select[port8080=1]' -q general-interactive -a 'docker(gcr.io/ris-registry-shared/novnc:<tag>)' supervisord -c /app/supervisord.conf
```
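Instead of typing a password by hand, you could generate a random one in the same shell before submitting. This is a minimal sketch using standard coreutils; it assumes the image reads PASSWORD, as in the command above, and the 16-character alphanumeric length is an arbitrary choice.

```shell
#!/bin/bash
# Generate a random 16-character alphanumeric password for the VNC session:
# 48 random bytes -> base64 text -> keep only letters and digits -> first 16.
PASSWORD="$(head -c 48 /dev/urandom | base64 | tr -dc 'A-Za-z0-9' | head -c 16)"
export PASSWORD
# Print it once so you can paste it into the browser login.
echo "VNC password: $PASSWORD"
```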

Please see our documentation for more information on selecting a port.

Note

If you are a member of more than one compute group, you will be prompted to specify an LSF User Group with -G group_name or by setting the LSB_SUB_USER_GROUP variable.
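To avoid being prompted on every submission, you can set LSB_SUB_USER_GROUP once per shell session (or in your shell startup file). This is a minimal sketch that uses the workshop group from the note at the top of this page; substitute your own group name.

```shell
#!/bin/bash
# Default LSF user group for this shell, equivalent to passing -G on each bsub.
export LSB_SUB_USER_GROUP=compute-workshop

# Later submissions can then omit -G, e.g.:
# bsub -Is -q workshop-interactive -a 'docker(gcr.io/ris-registry-shared/rstudio:latest)' /bin/bash
```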

The job will print the name of the blade it is running on in the terminal:

```text
<<Starting on compute1-exec-N.ris.wustl.edu>>
```

This translates to the URL you enter into the web browser:

```text
https://compute1-exec-N.compute.ris.wustl.edu:8080/vnc.html
```
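Because the URL follows a fixed pattern from the blade name, it can be derived automatically. This is a minimal sketch: vnc_url is a hypothetical helper, and it assumes the banner format and the .compute.ris.wustl.edu:8080 pattern shown here. Adjust the port if you mapped a different one in LSF_DOCKER_PORTS.

```shell
#!/bin/bash
# Hypothetical helper: turn the "<<Starting on HOST>>" banner printed by LSF
# into the noVNC URL, following the pattern shown above.
vnc_url() {
  local host
  # Keep only the short host name, e.g. compute1-exec-N.
  host="$(printf '%s\n' "$1" | sed -n 's/^<<Starting on \([^.]*\)\..*>>$/\1/p')"
  [ -n "$host" ] || return 1
  printf 'https://%s.compute.ris.wustl.edu:8080/vnc.html\n' "$host"
}

vnc_url '<<Starting on compute1-exec-N.ris.wustl.edu>>'
```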

The password will be what you set above.

You can change the GUI display size by clicking the settings icon on the left side of the browser window and selecting 'Local Scaling' from the Scaling Mode dropdown. Click the settings icon again to resume the session.

The display height and width can also be changed by passing them as variables.

Once in the GUI environment, you can start any of the software installed in the Docker image.

You should also have access to your storage directory as long as you set the LSF_DOCKER_VOLUMES variable.

As you can see here, the terminal shows that the storage directory has been mounted into our GUI container.

We can then open a GUI editor and load those files directly into the software we want to use.

When you are done with the session, any data you saved to a mounted directory will remain accessible outside of the container.

Closing the browser window does not end the job. To return to the session, open a web browser and enter the same URL to get back into the VNC session.

To exit out of the job completely, you need to select Log Out from the Applications menu in the GUI.

If you no longer need the job, it is important to use Log Out rather than just closing the browser; otherwise the job keeps running on the compute environment, tying up resources.
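If you have already closed the browser and no longer need the session, the standard LSF commands bjobs (list your jobs and their IDs) and bkill (kill a job by ID) can also end it from the client terminal. This is a minimal sketch; end_job is a hypothetical wrapper that only checks that the job ID is numeric before calling bkill.

```shell
#!/bin/bash
# Hypothetical wrapper: end an LSF job by numeric ID with bkill.
end_job() {
  case "$1" in
    ''|*[!0-9]*) echo "usage: end_job JOBID" >&2; return 1 ;;
  esac
  bkill "$1"
}

# Example (requires LSF on the client node):
# bjobs            # find the job ID of the VNC session
# end_job 12345    # placeholder ID
```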

Jobs in the general-interactive queue are killed after 24 hours.

Jobs in the general queue can run for up to 4 weeks.