Moving Data From Google Storage to RIS storage via gsutil on Compute1

What is this Documentation?

This documentation covers file transfers with gsutil over our dedicated fiber interconnect, used to download data from sources that store it in Google Cloud Storage.

storageN

The use of storageN within these documents indicates that any storage platform can be used. Currently available storage platforms:

- storage1
- storage2

Quick Start

1. Log in to the Compute platform

ssh wustlkey@compute1-client-1.ris.wustl.edu

2. Set up the Google account variable

export GOOGLE_ACCOUNT=wustlkey@wustl.edu

Account Information

This is the account that has been granted access to the data by the data owner.

This is not necessarily just an email address.

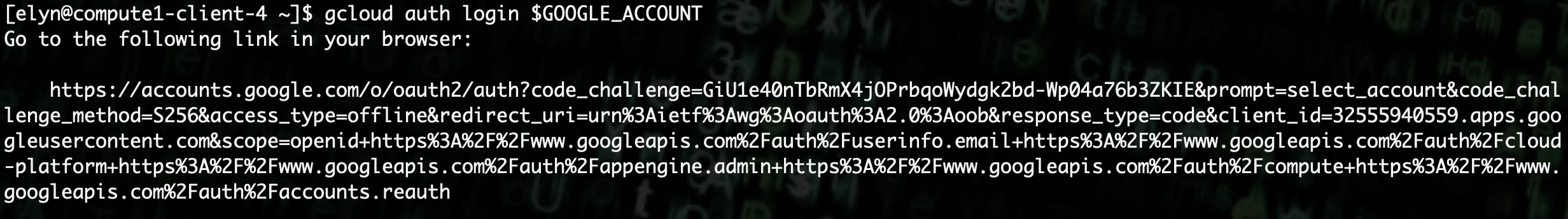

3. Log in with gcloud

gcloud auth login $GOOGLE_ACCOUNT

Follow the URL that you are given.

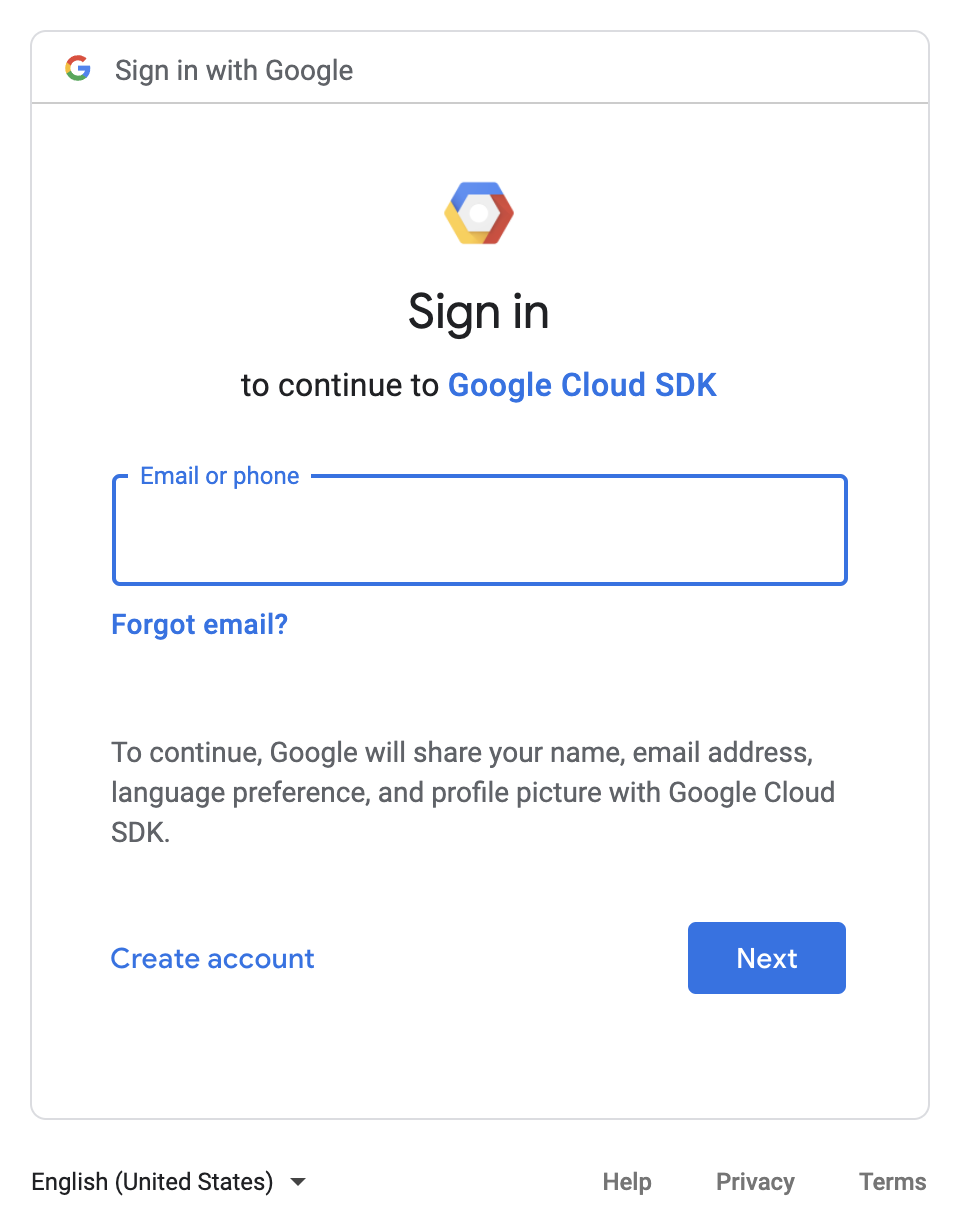

It will ask you to sign in to Google Cloud SDK. Use the email address that the data owner granted permission to, e.g. wustlkey@wustl.edu.

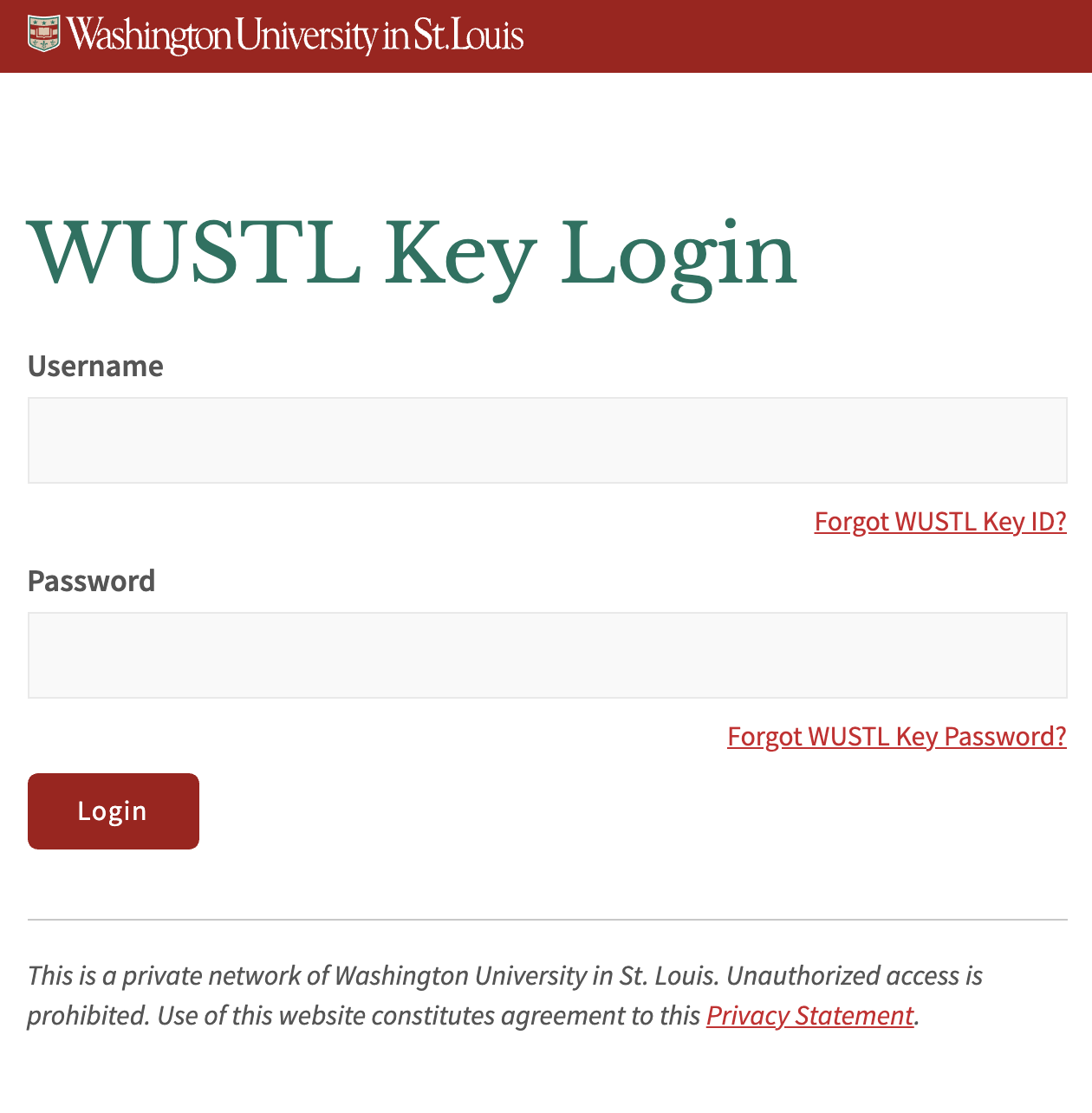

If it is your WashU email, this will take you to a WashU authentication page. Enter your credentials as you normally would for WashU sign-in.

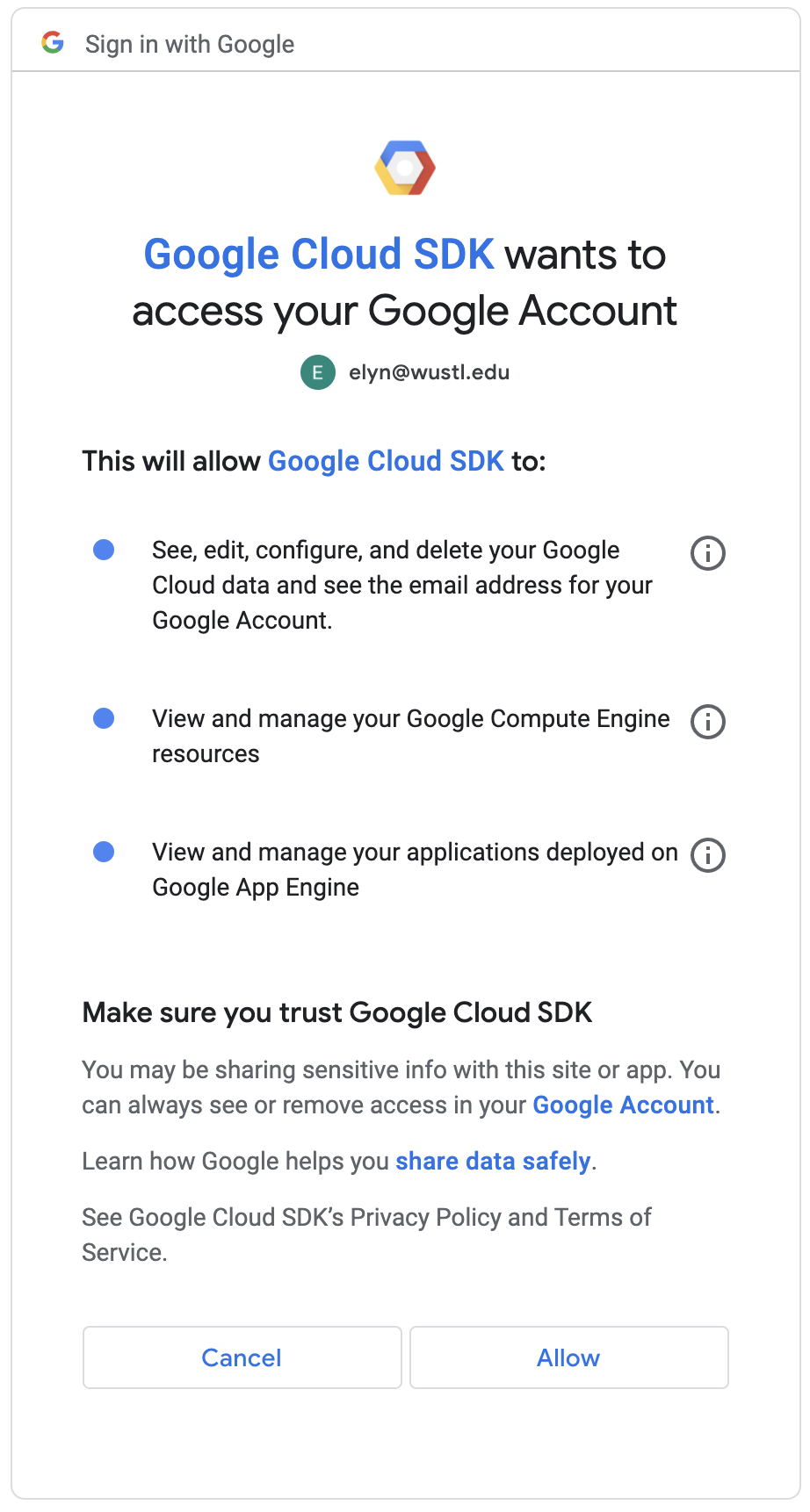

It will then ask for access to your account; click Allow.

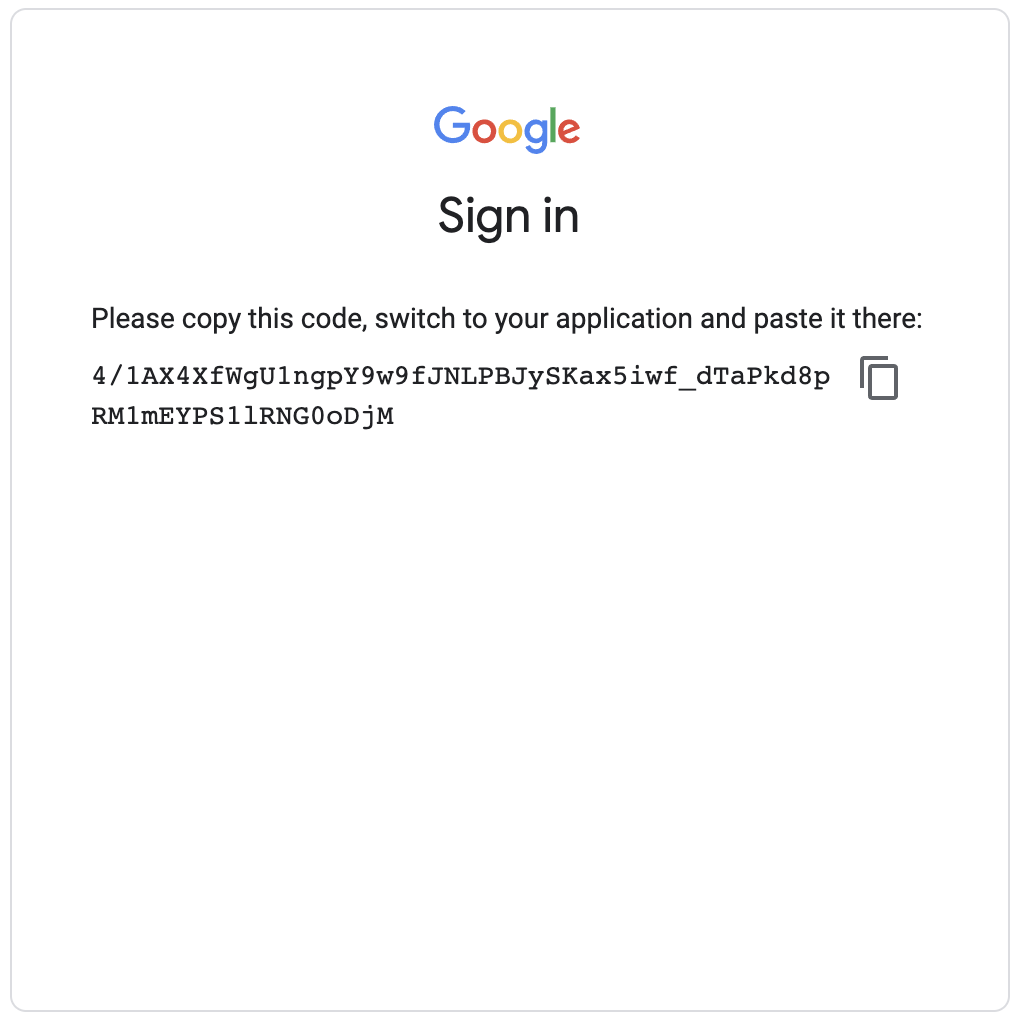

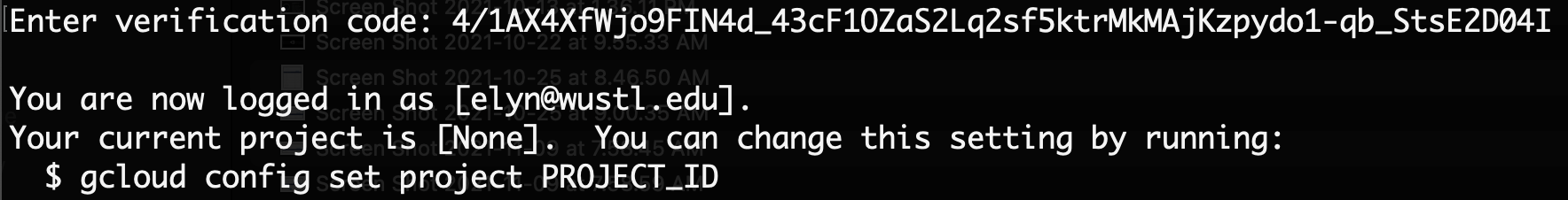

It will then give you a code; paste this code back into the terminal you are working in.

You can confirm this worked with the following command.


gcloud auth list

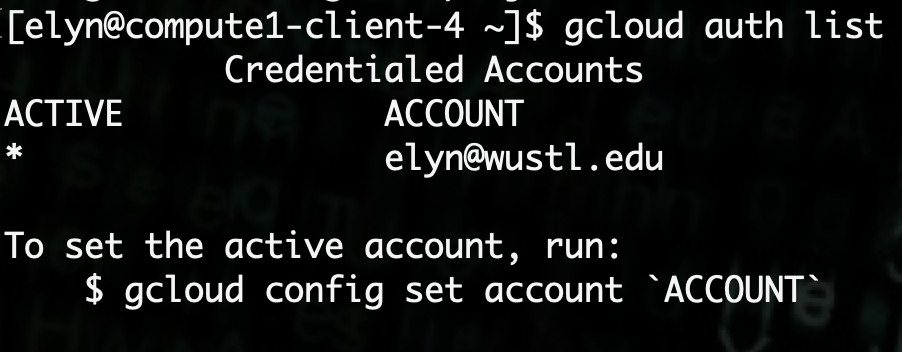

Multiple Accounts

If this account is the only account listed, it will by default be the “active” one.

If there are multiple accounts, use the following command to make the account you need the active one.

gcloud config set account $GOOGLE_ACCOUNT
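Before starting a long transfer, it can help to confirm that gcloud is actually using $GOOGLE_ACCOUNT. This is a minimal sketch assuming the gcloud CLI is on your PATH; `ensure_active_account` is a hypothetical helper name, not part of gcloud. It reads the current account with `gcloud config get-value account` and only switches accounts when it differs.

```shell
# Hypothetical helper: make $GOOGLE_ACCOUNT the active gcloud account.
# Assumes GOOGLE_ACCOUNT was exported in step 2.
ensure_active_account() {
    # Account gcloud currently uses (stderr notices are discarded).
    current=$(gcloud config get-value account 2>/dev/null)
    if [ "$current" != "$GOOGLE_ACCOUNT" ]; then
        echo "Active account is '${current}'; switching to '${GOOGLE_ACCOUNT}'" >&2
        gcloud config set account "$GOOGLE_ACCOUNT"
    fi
}
```

Usage: `ensure_active_account && gcloud auth list`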

4. Transferring the Data

Set the following variables needed for the transfer.

export GOOGLE_STORAGE_PATH=gs://path/from/data/provider

export DESTINATION_DIR=/storageN/fs1/${STORAGE_ALLOCATION}/Active/path/to/directory

gs://path/from/data/provider

gs://path/from/data/provider is the location of the data in Google Cloud Storage, as provided by the group sharing the data.
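A quick check of the two transfer variables can catch typos before a job is submitted. This is a minimal sketch: `check_transfer_vars` is a hypothetical helper, and it assumes the destination directory should already exist (create it first with `mkdir -p "$DESTINATION_DIR"` if it does not).

```shell
# Hypothetical helper: sanity-check GOOGLE_STORAGE_PATH and DESTINATION_DIR.
check_transfer_vars() {
    # The source must be a Cloud Storage URL such as gs://bucket/path.
    case "${GOOGLE_STORAGE_PATH:-}" in
        gs://?*) ;;
        *)
            echo "GOOGLE_STORAGE_PATH should start with gs://: '${GOOGLE_STORAGE_PATH:-}'" >&2
            return 1
            ;;
    esac
    # The destination must be an existing directory on storageN.
    if [ ! -d "${DESTINATION_DIR:-}" ]; then
        echo "DESTINATION_DIR is not a directory: '${DESTINATION_DIR:-}'" >&2
        return 1
    fi
    echo "Transfer variables look OK"
}
```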

You will also need to set the following variables:

export LSB_JOB_REPORT_MAIL=N

export LSF_DOCKER_VOLUMES="/storageN/fs1/${STORAGE_ALLOCATION}/Active/path/to/directory:/data"

export LSF_DOCKER_ADD_HOST=storage.googleapis.com:199.36.153.4
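Because LSF_DOCKER_VOLUMES repeats the destination path, one option is to build it from DESTINATION_DIR so the two cannot drift apart. A minimal sketch, with `mount_destination` as a hypothetical helper name; note that it replaces any value LSF_DOCKER_VOLUMES already had rather than appending to it.

```shell
# Hypothetical helper: derive LSF_DOCKER_VOLUMES from DESTINATION_DIR.
mount_destination() {
    if [ -z "${DESTINATION_DIR:-}" ]; then
        echo "Set DESTINATION_DIR first" >&2
        return 1
    fi
    # Mount the destination at /data inside the container; gsutil writes to /data/.
    export LSF_DOCKER_VOLUMES="${DESTINATION_DIR}:/data"
}
```

Usage: `mount_destination && echo "$LSF_DOCKER_VOLUMES"`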

You need to launch an interactive bsub job in the google/cloud-sdk Docker image to use the Google Cloud tools:

bsub -Is -q general-interactive -a 'docker(google/cloud-sdk)' /bin/bash

You are a member of multiple LSF User Groups

If you are a member of more than one compute group, you will be prompted to specify an LSF User Group with -G group_name or by setting the LSB_SUB_USER_GROUP variable.
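For example, the interactive job can be launched with an explicit group. In this sketch `launch_gcloud_shell` is a hypothetical wrapper and `compute-mylab` a placeholder group name; substitute your own LSF User Group, or export LSB_SUB_USER_GROUP and call the wrapper with no argument.

```shell
# Hypothetical wrapper around the bsub command above.
# Usage: launch_gcloud_shell [lsf_user_group]
launch_gcloud_shell() {
    if [ -n "${1:-}" ]; then
        # Pass the LSF User Group explicitly with -G.
        bsub -G "$1" -Is -q general-interactive -a 'docker(google/cloud-sdk)' /bin/bash
    else
        # No group given: rely on LSB_SUB_USER_GROUP or a single group membership.
        bsub -Is -q general-interactive -a 'docker(google/cloud-sdk)' /bin/bash
    fi
}
```

Usage: `launch_gcloud_shell compute-mylab`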

The following command runs a trial of the transfer to make sure it works. The -n option makes it a dry run: it lists what would be copied without copying anything.

gsutil rsync -r -n $GOOGLE_STORAGE_PATH /data/

Once the test is complete and there are no issues, run the transfer by removing the -n option.

gsutil rsync -r $GOOGLE_STORAGE_PATH /data/

If there are problems or the transfer locks up, you can safely restart it without losing progress: like standard rsync, gsutil rsync skips files that already match at the destination, so it continues from where it stopped.
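Because a rerun continues from where the previous one stopped, a failed run can simply be retried. The sketch below assumes it is run inside the interactive job, where /data is the mounted destination; `rsync_with_retries`, MAX_TRIES and RETRY_DELAY are hypothetical names, and the defaults of 5 attempts and 60 seconds are arbitrary. It only helps when gsutil exits with an error; a transfer that hangs still needs to be interrupted (Ctrl-C) before it can be rerun.

```shell
# Hypothetical helper: rerun gsutil rsync until it succeeds or attempts run out.
rsync_with_retries() {
    max_tries=${MAX_TRIES:-5}
    try=1
    while [ "$try" -le "$max_tries" ]; do
        # Each rerun skips files that already match at the destination.
        if gsutil rsync -r "${GOOGLE_STORAGE_PATH:-}" /data/; then
            echo "Transfer completed on attempt $try"
            return 0
        fi
        echo "Attempt $try failed" >&2
        try=$((try + 1))
        # Pause before the next attempt, but not after the last one.
        if [ "$try" -le "$max_tries" ]; then
            sleep "${RETRY_DELAY:-60}"
        fi
    done
    echo "Giving up after $max_tries attempts" >&2
    return 1
}
```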

If you are transferring a large number of small files, parallel transfers may be faster.

The following command will run a transfer in parallel.

gsutil -m -o GSUtil:parallel_composite_upload_threshold=150M -o GSUtil:parallel_thread_count=16 rsync -r $GOOGLE_STORAGE_PATH /data/

Warning

Use the parallel option with the understanding that it can be error-prone.
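Given that warning, it can help to make the thread count easy to lower if parallel transfers start failing. A minimal sketch, run inside the interactive job; `parallel_rsync` is a hypothetical wrapper and its default of 16 threads simply mirrors the parallel command above. As we read the gsutil documentation, parallel_composite_upload_threshold applies to uploads, so it likely has no effect on a download from Google Storage; it is kept here only to match the original command.

```shell
# Hypothetical wrapper: the parallel transfer with a configurable thread count.
# Usage: parallel_rsync [threads]   (default 16)
parallel_rsync() {
    threads=${1:-16}
    # -m runs rsync operations in parallel.
    # Each -o overrides a boto config option for this invocation only.
    # parallel_composite_upload_threshold is kept to mirror the original command.
    gsutil -m \
        -o "GSUtil:parallel_composite_upload_threshold=150M" \
        -o "GSUtil:parallel_thread_count=${threads}" \
        rsync -r "${GOOGLE_STORAGE_PATH:-}" /data/
}
```

Usage: start with `parallel_rsync` and drop to, say, `parallel_rsync 4` if errors appear; because rsync skips completed files, the lower-thread rerun does not repeat finished work.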